AI 3D Model Generation: An In-Depth Analysis of the Technical Principles, from Neural Networks to TRELLIS

AI-generated 3D model technology is developing rapidly, yet its underlying principles are not widely understood. From early GANs to recent models such as TRELLIS, how do these systems turn a text description or an image into a detailed 3D model? This article examines the core techniques behind AI 3D generation.

Development History of AI 3D Generation Technology

Early Exploration Phase (2015-2019)

GAN-Based 3D Generation

The earliest 3D generation attempts were based on Generative Adversarial Networks (GANs). Models like 3D-GAN could generate simple 3D shapes by learning from 3D voxel data. However, limited computational resources and data quality kept the generated models at low resolution with little detail.

Technical Characteristics:

- Based on voxel representation, typically 32³ or 64³ resolution

- Fast generation but limited quality

- Mainly used for simple geometric shape generation

- Lacked fine surface details

Technical Challenges:

- Memory consumption increased cubically with resolution

- Difficult to generate high-quality surface textures

- Difficulty acquiring training data

- Limited diversity in generation results
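The cubic growth in memory is easy to quantify. The sketch below assumes a dense grid that stores one float32 value (4 bytes) per voxel for a single sample. Real models also keep activations for many channels and a whole batch, so actual usage is far higher.

```python
# Memory of a dense voxel grid grows with the cube of its resolution.
# Assumption: one float32 value (4 bytes) per voxel, one sample, one channel.
BYTES_PER_FLOAT32 = 4


def voxel_grid_bytes(resolution: int, channels: int = 1) -> int:
    """Bytes needed for a dense resolution^3 grid with `channels` float32 values per voxel."""
    return resolution ** 3 * channels * BYTES_PER_FLOAT32


for res in (32, 64, 128, 256):
    mib = voxel_grid_bytes(res) / 2 ** 20
    print(f"{res}^3 grid: {mib:g} MiB")  # 0.125, 1, 8, 64 MiB
```

Each doubling of resolution multiplies memory by 8. This is why early voxel-based GANs stayed at 32³ or 64³.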

Deep Learning Breakthrough Period (2020-2022)

Neural Radiance Fields (NeRF) Revolution

The emergence of NeRF technology marked a major breakthrough in the 3D generation field. By using a neural network to represent the volumetric density and color of a 3D scene, NeRF could reconstruct high-quality 3D scenes from a set of 2D images with known camera poses.

Core Innovations:

- Using Multi-Layer Perceptrons (MLPs) to represent 3D scenes

- Generating 2D images through volumetric rendering

- Supporting rendering from arbitrary viewpoints

- Modeling view-dependent appearance effects such as specular highlights

Technical Architecture:

Input: (x, y, z, θ, φ) → MLP → (density, color)

Rendering: Volume rendering along each camera ray → 2D image

Optimization: Minimize the reconstruction loss between rendered and real pixels
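The rendering step can be made concrete. The sketch below implements the standard discrete volume-rendering quadrature used by NeRF for a single ray. The MLP itself is omitted, so the per-sample densities and colors are given as inputs. In a real system they come from querying the network at points sampled along the ray.

```python
import numpy as np


def render_ray(sigmas, colors, deltas):
    """Composite per-sample densities and colors along one ray (NeRF-style quadrature).

    sigmas: (N,) non-negative densities at the N samples
    colors: (N, 3) RGB colors at the samples
    deltas: (N,) distances between adjacent samples
    Returns the rendered RGB color and the per-sample weights.
    """
    sigmas = np.asarray(sigmas, dtype=float)
    colors = np.asarray(colors, dtype=float)
    deltas = np.asarray(deltas, dtype=float)
    # alpha_i = 1 - exp(-sigma_i * delta_i): probability the ray terminates in segment i
    alphas = 1.0 - np.exp(-sigmas * deltas)
    # T_i = exp(-sum_{j<i} sigma_j * delta_j): probability the ray reaches sample i
    optical_depth = np.cumsum(sigmas * deltas)
    transmittance = np.exp(-np.concatenate([[0.0], optical_depth[:-1]]))
    weights = transmittance * alphas
    return (weights[:, None] * colors).sum(axis=0), weights


# Usage: a dense, uniformly red medium along the ray renders as (almost exactly) red.
rgb, weights = render_ray(np.full(64, 100.0), np.tile([1.0, 0.0, 0.0], (64, 1)), np.full(64, 0.1))
```

Every operation here is differentiable. When the same computation is written in an autodiff framework, the reconstruction loss between rendered and real pixels can therefore be backpropagated into the MLP that produced the densities and colors.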

Introduction of Diffusion Models

The success of diffusion models in image generation inspired researchers to apply them to 3D generation. By learning to reverse a gradual noising process, diffusion models could generate high-quality 3D structures.

Modern AI Generation Era (2023-Present)

Multi-Modal Large Model Applications

The development of Large Language Models (LLMs) and vision-language models brought new possibilities to 3D generation. By interpreting natural language descriptions, AI systems can generate 3D models that match the described content.

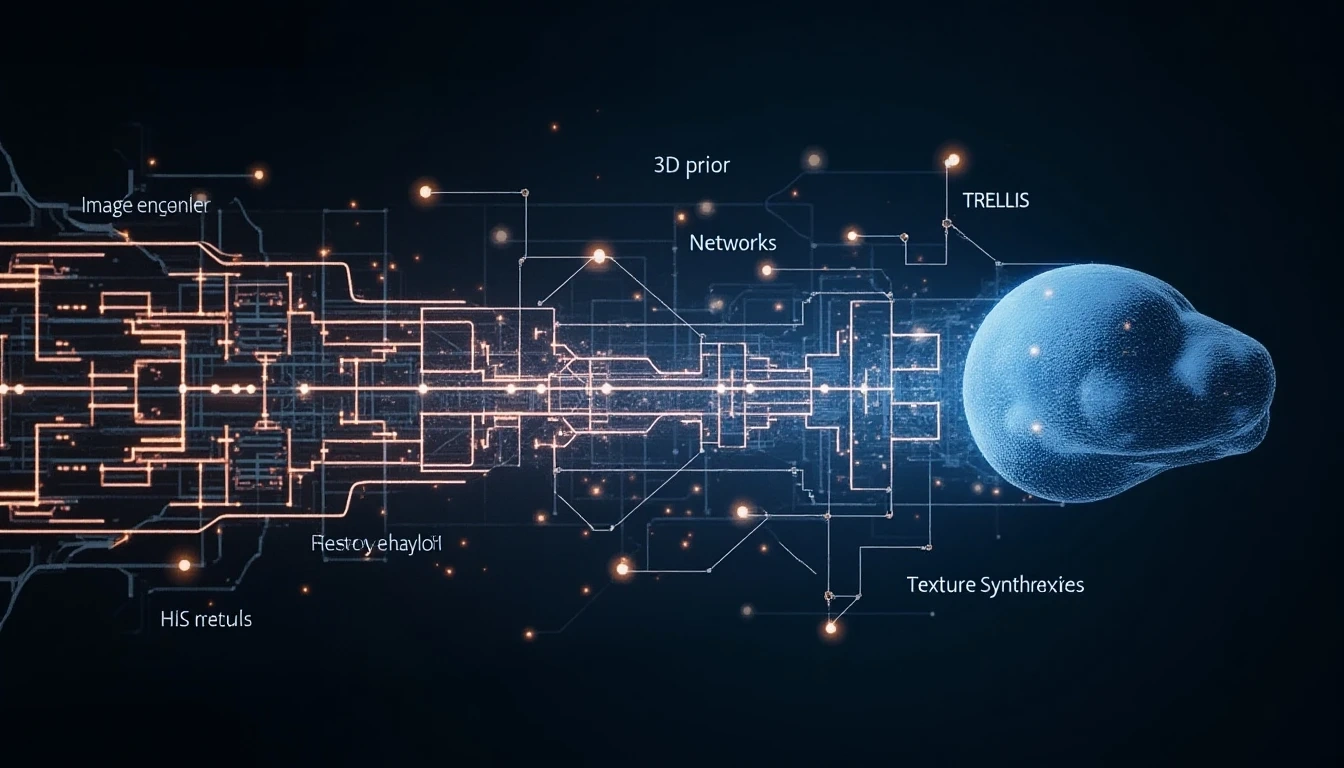

TRELLIS Model Breakthrough

The TRELLIS model, used by platforms such as Open3D.art, is among the most advanced 3D generation models currently available and can rapidly convert images into high-quality 3D models.

In-Depth Technical Analysis of the TRELLIS Model

Model Architecture Overview

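TRELLIS is a large-scale 3D generation model from Microsoft Research that supports image-to-3D (and text-to-3D) generation. It is built around a Structured 3D Latent (SLAT) representation: a sparse 3D voxel grid with local latent features attached to the occupied voxels. This latent can be decoded into several output formats, including 3D Gaussians, radiance fields, and meshes.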

Core Components (a conceptual breakdown):

- Image Encoder: Extracts features from input images

- 3D Prior Network: Understands 3D geometric structures

- Mesh Generator: Creates 3D mesh structures

- Texture Synthesizer: Generates surface materials

Technical Innovation Points

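1. Hierarchical (Coarse-to-Fine) Representation

TRELLIS builds 3D models from coarse to fine. It first generates a sparse structure (which voxels of a coarse 3D grid are occupied). It then generates local latent features on those voxels, which are decoded into detailed geometry and appearance. Conceptually, the levels of detail are: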

Coarse Layer (Geometric Shape) → Medium Layer (Surface Details) → Fine Layer (Texture Information)
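One common way to realize coarse-to-fine generation on a sparse grid is to refine only the voxels that the coarse stage marked as occupied. The function below is a generic illustration of that subdivision step under this assumption, not code from TRELLIS. `subdivide_active_voxels` is a hypothetical name.

```python
import numpy as np


def subdivide_active_voxels(coarse_coords):
    """Map each occupied voxel at resolution R to its 8 child voxels at resolution 2R.

    coarse_coords: (N, 3) integer indices of occupied coarse voxels.
    Returns an (8N, 3) array of child indices at the finer level.
    """
    coarse_coords = np.asarray(coarse_coords, dtype=np.int64)
    # Offsets (0 or 1 along each axis) of the 8 cells in a 2x2x2 block
    offsets = np.array([[i, j, k] for i in (0, 1) for j in (0, 1) for k in (0, 1)])
    # Child index = 2 * parent index + offset; broadcasting (N,1,3) + (1,8,3) -> (N,8,3)
    children = 2 * coarse_coords[:, None, :] + offsets[None, :, :]
    return children.reshape(-1, 3)


# Usage: two occupied voxels in a coarse grid become 16 candidate voxels one level finer.
fine = subdivide_active_voxels([[0, 0, 0], [1, 2, 3]])
print(fine.shape)  # (16, 3)
```

Empty space is never refined, so the number of fine voxels scales with the occupied (mostly surface) region rather than with the full volume.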

2. Attention Mechanism Application

Using the attention mechanism of the Transformer architecture, the model can focus on key features in the input image and map them into 3D space:

- Spatial Attention: Identifies spatial structure of objects

- Feature Attention: Extracts important visual features

- Cross-Modal Attention: Connects 2D images and 3D geometry
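Cross-modal attention, the component that links 2D and 3D, can be sketched as single-head scaled dot-product cross-attention. Tokens describing the 3D representation act as queries, and image-encoder tokens supply the keys and values. The shapes and random projection matrices below are illustrative assumptions. Production models use multiple heads, learned weights, and many stacked layers.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_attention(queries, keys, values, w_q, w_k, w_v):
    """Single-head scaled dot-product cross-attention.

    queries: (Nq, d_model) tokens of the 3D representation
    keys, values: (Nk, d_model) image feature tokens from the image encoder
    w_q, w_k, w_v: (d_model, d_head) projection matrices
    """
    q, k, v = queries @ w_q, keys @ w_k, values @ w_v
    scores = q @ k.T / np.sqrt(k.shape[-1])  # (Nq, Nk): similarity of each 3D token to each image token
    weights = softmax(scores, axis=-1)       # each 3D token's weights over image tokens sum to 1
    return weights @ v, weights


# Usage with hypothetical sizes: 64 3D tokens attend to 256 image patch features.
rng = np.random.default_rng(0)
d_model, d_head = 32, 16
voxel_tokens = rng.standard_normal((64, d_model))
image_tokens = rng.standard_normal((256, d_model))
w_q, w_k, w_v = (rng.standard_normal((d_model, d_head)) / np.sqrt(d_model) for _ in range(3))
out, attn = cross_attention(voxel_tokens, image_tokens, image_tokens, w_q, w_k, w_v)
```

Each row of `attn` shows which image regions a given 3D token draws its features from.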

3. Geometric Constraint Optimization

The model integrates multiple geometric constraints to ensure the generated 3D models are physically plausible:

- Topological Consistency: Ensures model connectivity

- Surface Smoothness: Avoids unnatural sharp edges

- Plausible Proportions: Maintains correct object proportions
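Surface smoothness is often encouraged with a Laplacian regularizer, which penalizes vertices that stick out from the average of their neighbors. The sketch below computes a uniform-Laplacian loss in NumPy for readability. As an assumption about usage, in training the same computation would be written in an autodiff framework, so its gradient can push vertices toward smoother positions.

```python
import numpy as np


def uniform_laplacian_loss(vertices, faces):
    """Mean squared uniform-Laplacian magnitude of a triangle mesh.

    The uniform Laplacian at a vertex is the vector from the vertex to the mean
    of its 1-ring neighbors; large values flag spikes and sharp creases.
    vertices: (V, 3) positions; faces: (F, 3) vertex indices.
    """
    vertices = np.asarray(vertices, dtype=float)
    neighbors = [set() for _ in range(len(vertices))]
    for a, b, c in np.asarray(faces):
        neighbors[a].update((b, c))
        neighbors[b].update((a, c))
        neighbors[c].update((a, b))
    lap = np.zeros_like(vertices)
    for i, nbrs in enumerate(neighbors):
        if nbrs:  # isolated vertices contribute zero
            lap[i] = vertices[list(nbrs)].mean(axis=0) - vertices[i]
    return float((lap ** 2).sum(axis=1).mean())
```

Raising a single vertex out of a flat patch increases this loss. Minimizing it therefore flattens unnatural spikes, though weighting it too heavily can also erase intended sharp features.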

Training Data and Process

Dataset Construction

Training models like TRELLIS requires large amounts of paired 2D-3D data:

- Synthetic Data: Generated through 3D rendering engines

- Real Data: Collected using 3D scanning devices

- Augmented Data: Expanded through data augmentation techniques

Training Strategy

Multi-Stage Training (a typical strategy; details vary between models):

- Pre-training Phase: Pre-train on large-scale synthetic data

- Fine-tuning Phase: Fine-tune on real data

- Adversarial Training: Use discriminators to improve generation quality

Loss Function Design (a typical composite objective; the exact terms and weights vary by model):

Total Loss = Reconstruction Loss + Geometric Loss + Adversarial Loss + Regularization Loss

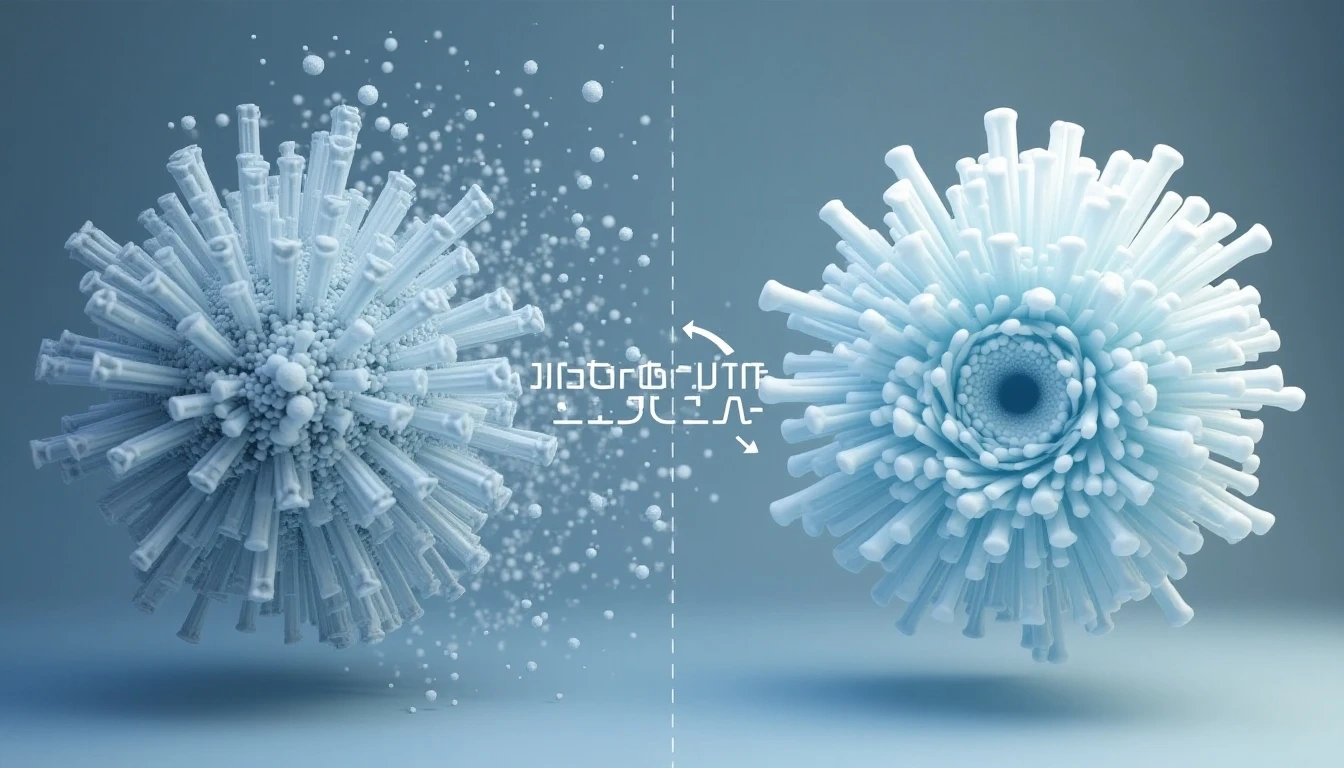

Diffusion Model Applications in 3D Generation

Diffusion Process Principles

Diffusion models generate 3D structures by learning to reverse a gradual noising (diffusion) process:

- Forward Process: Gradually add noise to 3D data

- Reverse Process: Gradually denoise through a neural network to recover the 3D structure
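The forward process has a closed form, so a noisy version of a shape at any step t can be sampled directly from the clean data. The sketch below applies it to a point cloud and assumes the linear β schedule from the original DDPM formulation. The reverse process would use a trained network that predicts the added noise `eps` from `x_t` and `t`; that network is not shown here.

```python
import numpy as np


def linear_beta_schedule(num_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances beta_t, increasing linearly over the diffusion steps."""
    return np.linspace(beta_start, beta_end, num_steps)


def q_sample(x0, t, alpha_bars, rng):
    """Sample x_t ~ q(x_t | x_0) = N(sqrt(abar_t) * x0, (1 - abar_t) * I) in one step."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * eps
    return xt, eps  # eps is the regression target for a noise-prediction network


betas = linear_beta_schedule()
alpha_bars = np.cumprod(1.0 - betas)  # abar_t = prod_{s<=t} (1 - beta_s)

# Usage: noise a point cloud of 2048 points on the unit sphere.
rng = np.random.default_rng(0)
points = rng.standard_normal((2048, 3))
points /= np.linalg.norm(points, axis=1, keepdims=True)
x_mid, _ = q_sample(points, 250, alpha_bars, rng)  # partially noised shape
x_end, _ = q_sample(points, 999, alpha_bars, rng)  # almost pure Gaussian noise
```

Training typically samples a random t, produces `(x_t, eps)` this way, and minimizes the mean squared error between the network's predicted noise and `eps`.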

Technical Challenges in 3D Diffusion

High-Dimensional Data Processing

The high-dimensional nature of 3D data brings computational challenges:

- Enormous memory requirements

- Long training times

- Need for specialized optimization strategies

Geometric Consistency Guarantee

Generated 3D models must also be geometrically valid:

- Surface continuity

- Topological correctness

- Physical feasibility

Solutions and Optimizations

Latent Space Diffusion

Running diffusion in a compressed latent space rather than directly on the raw 3D representation:

- Reduces computational complexity

- Improves training efficiency

- Maintains generation quality
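A quick element count shows why this matters. The sizes below are hypothetical and chosen only for illustration: a dense 256³ grid with 4 values per voxel, compared with a 32³ latent grid with 8 channels.

```python
def num_elements(resolution: int, channels: int) -> int:
    """Number of values in a dense resolution^3 grid with `channels` values per cell."""
    return resolution ** 3 * channels


raw = num_elements(256, 4)    # hypothetical raw representation, e.g. RGB + density
latent = num_elements(32, 8)  # hypothetical latent grid produced by an autoencoder
print(raw, latent, raw // latent)  # 67108864 262144 256
```

Under these assumptions, the diffusion model denoises 256 times fewer values per step. A separately trained decoder then maps the final latent back to full-resolution 3D output.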

Conditional Diffusion

Guiding the generation process with conditioning information:

- Text conditions: Generate based on text descriptions

- Image conditions: Generate based on reference images

- Category conditions: Specify object categories
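A widely used way to apply these conditions at sampling time is classifier-free guidance. The denoiser is evaluated both with and without the condition, and the two predictions are extrapolated. It is shown here as one common technique; individual systems may condition differently.

```python
import numpy as np


def classifier_free_guidance(eps_uncond, eps_cond, guidance_scale):
    """Blend unconditional and conditional noise predictions from the same denoiser.

    guidance_scale = 0 -> unconditional prediction; 1 -> conditional prediction;
    > 1 -> extrapolates toward the condition (text, image, or category), usually
    improving adherence to the condition at some cost in diversity.
    """
    eps_uncond = np.asarray(eps_uncond, dtype=float)
    eps_cond = np.asarray(eps_cond, dtype=float)
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)
```

During training, the condition is randomly replaced by a null embedding for a fraction of samples. This lets a single network learn both the conditional and unconditional predictions.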

Multi-Modal Fusion Technology

Text-to-3D Implementation

Language Understanding Module

Using pre-trained language models to understand text descriptions:

- Entity recognition: Identify object names and attributes

- Relationship understanding: Understand spatial relationships between objects

- Style parsing: Extract artistic style information

Text-3D Mapping

Mapping text features to 3D space:

Text Embedding → Feature Transformation → 3D Generation Conditions

Image-to-3D In-Depth Analysis

Single-View 3D Reconstruction

Challenges in reconstructing complete 3D models from single images:

- Occlusion Problem: Inferring occluded parts

- Depth Ambiguity: Depth uncertainty in 2D projections

- Limited Viewpoint: A single view shows only one side of the object

Solution Strategies:

- Prior Knowledge: Utilizing geometric priors of object categories

- Shape Completion: Inferring complete shapes from visible parts

- Multi-Scale Analysis: Reconstruction at different resolutions
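Depth ambiguity follows directly from perspective projection: every point along the same camera ray lands on the same pixel. The pinhole model below uses arbitrary illustrative values for the focal length and coordinates. It shows two points at different depths projecting to the same image position, which is why single-view methods must rely on learned priors to choose a plausible depth.

```python
import numpy as np


def project(point, focal_length=1.0):
    """Pinhole projection of a camera-space point (x, y, z) with z > 0 onto the image plane."""
    x, y, z = point
    return np.array([focal_length * x / z, focal_length * y / z])


near = np.array([0.5, 0.2, 2.0])
far = 3.0 * near  # same viewing ray, three times farther from the camera
print(project(near), project(far))  # identical image coordinates (up to float rounding)
```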

Implementing Real-Time Generation

Computational Optimization Strategies

Model Compression

Reducing model size and computational load:

- Knowledge Distillation: Small models learning from large models

- Quantization Techniques: Reducing numerical precision

- Pruning Methods: Removing unimportant connections
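Quantization is the easiest of these to show in a few lines. The sketch below uses symmetric per-tensor int8 quantization, one simple scheme among several; per-channel scales and calibration-based methods are common in practice. It stores 1 byte per weight instead of 4.

```python
import numpy as np


def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: weights ≈ scale * q with q in [-127, 127]."""
    max_abs = float(np.abs(weights).max())
    scale = max_abs / 127.0 if max_abs > 0 else 1.0  # avoid dividing by zero for all-zero tensors
    q = np.clip(np.round(weights / scale), -127, 127).astype(np.int8)
    return q, scale


def dequantize(q, scale):
    """Recover approximate float32 weights from int8 values and the shared scale."""
    return q.astype(np.float32) * scale
```

The rounding error per weight is at most half a quantization step (`scale / 2`). Outlier weights enlarge `scale` for the whole tensor, which is one reason finer-grained scales are often preferred.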

Parallel Computing

Fully utilizing GPU parallel capabilities:

- Batch Processing Optimization: Processing multiple requests simultaneously

- Pipeline Parallelism: Simultaneous execution of different stages

- Model Parallelism: Distributing large models across multiple GPUs

Caching and Pre-computation

Result Caching

Caching common requests:

- Feature Caching: Caching intermediate feature representations

- Result Caching: Caching final generation results

- Intelligent Invalidation: Similarity-based cache invalidation
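Similarity-based result caching can be sketched as a lookup over request embeddings, for example from an image or text encoder. A new request reuses a stored result when the two embeddings are close enough in cosine similarity. The class name, threshold, and file names below are hypothetical.

```python
import numpy as np


class SimilarityCache:
    """Hypothetical result cache keyed by request embeddings.

    A lookup is a hit when the cosine similarity between the new request's
    embedding and a stored one reaches `threshold`.
    """

    def __init__(self, threshold: float = 0.95):
        self.threshold = threshold
        self.keys = []    # unit-normalized embeddings of past requests
        self.values = []  # cached results, e.g. paths to generated model files

    @staticmethod
    def _normalize(v):
        v = np.asarray(v, dtype=float)
        return v / np.linalg.norm(v)

    def get(self, embedding):
        """Return the cached result for the most similar stored request, or None on a miss."""
        if not self.keys:
            return None
        sims = np.stack(self.keys) @ self._normalize(embedding)  # cosine similarities
        best = int(np.argmax(sims))
        return self.values[best] if sims[best] >= self.threshold else None

    def put(self, embedding, result):
        self.keys.append(self._normalize(embedding))
        self.values.append(result)


# Usage: a near-duplicate request hits, an unrelated one misses.
cache = SimilarityCache(threshold=0.95)
cache.put([1.0, 0.0, 0.0], "chair_v1.glb")
hit = cache.get([0.99, 0.05, 0.0])
miss = cache.get([0.0, 1.0, 0.0])
```

The linear scan is fine for small caches. Larger ones would use an approximate nearest-neighbor index, and the threshold must be tuned so that meaningfully different requests are not served stale results.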

Pre-computation Optimization

Pre-computing common components:

- Basic Shape Library: Pre-computing common geometric shapes

- Material Templates: Pre-defined material parameters

- Style Filters: Pre-computed style transformations

Quality Assessment and Control

Evaluation Metric System

Geometric Quality Metrics

- Chamfer Distance: Measures the average nearest-neighbor distance between two point clouds

- Earth Mover's Distance: Measures the cost of an optimal one-to-one matching between two point sets

- Normal Consistency: Measures how closely surface normals align with those of the reference shape
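Chamfer distance and normal consistency are straightforward to compute for small point clouds. The brute-force NumPy sketch below uses the mean squared nearest-neighbor distance in each direction. This is a common variant, but some papers use unsquared or summed distances, so values are only comparable under the same definition. The sketch assumes unit-length normals and builds an N×M distance matrix, so large clouds need a KD-tree instead.

```python
import numpy as np


def nearest(src, dst):
    """For each point in src (N, 3): index of and squared distance to its nearest point in dst (M, 3)."""
    d2 = ((src[:, None, :] - dst[None, :, :]) ** 2).sum(axis=-1)  # (N, M) squared distances
    return d2.argmin(axis=1), d2.min(axis=1)


def chamfer_distance(p, q):
    """Symmetric Chamfer distance: mean squared nearest-neighbor distance, both directions."""
    _, d_pq = nearest(p, q)
    _, d_qp = nearest(q, p)
    return float(d_pq.mean() + d_qp.mean())


def normal_consistency(p, n_p, q, n_q):
    """Mean |cos| between each point's normal and the normal of its nearest point in the other cloud."""
    idx_pq, _ = nearest(p, q)
    idx_qp, _ = nearest(q, p)
    cos_pq = np.abs((n_p * n_q[idx_pq]).sum(axis=1))
    cos_qp = np.abs((n_q * n_p[idx_qp]).sum(axis=1))
    return float(0.5 * (cos_pq.mean() + cos_qp.mean()))
```

Earth Mover's Distance, by contrast, requires an optimal one-to-one matching. For equal-size clouds it can be computed with an assignment solver such as `scipy.optimize.linear_sum_assignment`, at much higher cost.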

Visual Quality Metrics

- SSIM: Structural Similarity Index

- LPIPS: Learned Perceptual Image Patch Similarity

- FID: Fréchet Inception Distance
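Of these, FID compares the statistics of Inception-network features extracted from real and generated images; for 3D models it is typically computed on rendered views. The formula below uses feature means μ_r, μ_g and covariances Σ_r, Σ_g for the real and generated sets. Lower values are better.

```latex
\mathrm{FID} = \lVert \mu_r - \mu_g \rVert_2^2
  + \operatorname{Tr}\!\left( \Sigma_r + \Sigma_g - 2 \left( \Sigma_r \Sigma_g \right)^{1/2} \right)
```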

User Experience Metrics

- Generation Speed: Time from input to output

- Success Rate: Proportion of reasonable results generated

- User Satisfaction: Subjective evaluation results

Quality Control Mechanisms

Multi-Level Validation

Establishing multi-level quality inspection systems:

- Geometric Validation: Check basic geometric properties

- Semantic Validation: Ensure compliance with input descriptions

- Aesthetic Validation: Evaluate visual appeal
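Geometric validation often starts with cheap topological checks. The sketch below tests whether every edge of a triangle mesh is shared by exactly two faces. This is a necessary condition for a closed, watertight surface, but it does not check orientation or self-intersections. It also computes the Euler characteristic V - E + F, which equals 2 for a closed mesh with the topology of a sphere.

```python
from collections import Counter


def undirected_edges(faces):
    """Yield each triangle edge as a sorted (u, v) vertex pair."""
    for a, b, c in faces:
        for u, v in ((a, b), (b, c), (c, a)):
            yield (min(u, v), max(u, v))


def is_watertight(faces):
    """True if every undirected edge is shared by exactly two faces."""
    edge_counts = Counter(undirected_edges(faces))
    return all(count == 2 for count in edge_counts.values())


def euler_characteristic(num_vertices, faces):
    """V - E + F; equals 2 for a closed mesh topologically equivalent to a sphere."""
    return num_vertices - len(set(undirected_edges(faces))) + len(faces)


# Usage: a tetrahedron is closed; removing one face opens a hole.
tetrahedron = [(0, 1, 2), (0, 3, 1), (0, 2, 3), (1, 3, 2)]
print(is_watertight(tetrahedron), is_watertight(tetrahedron[:3]))  # True False
```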

Automatic Repair

Automatic repair of common issues:

- Mesh Repair: Fix damaged mesh structures

- Texture Optimization: Improve texture quality

- Proportion Adjustment: Correct unreasonable proportions

Technology Development Trends and Challenges

Future Development Directions

Higher Generation Quality

- More refined geometric details

- More realistic material representation

- More accurate semantic understanding

Faster Generation Speed

- Model optimization and compression

- Hardware acceleration technology

- Distributed computing architecture

Stronger Controllability

- Precise parameter control

- Interactive editing capabilities

- Style transfer functions

Technical Challenges

Computational Resource Requirements

3D generation still has high computational resource demands:

- GPU memory limitations

- High training costs

- Need for inference speed optimization

Data Quality and Diversity

Difficulty in obtaining high-quality training data:

- High 3D data collection costs

- Enormous annotation workload

- Unbalanced data distribution

Generalization Ability

Models still generalize poorly to new scenarios:

- Domain adaptation problems

- Long-tail distribution handling

- Zero-shot learning capabilities

Technical Considerations in Practical Applications

Platform Architecture Design

Microservices Architecture

Splitting 3D generation services into multiple microservices:

- Preprocessing Service: Handle user inputs

- Generation Service: Execute AI model inference

- Post-processing Service: Optimize generation results

- Storage Service: Manage models and data

Load Balancing

Allocating computational resources efficiently:

- Request Distribution: Allocate requests to different servers

- Resource Monitoring: Real-time system load monitoring

- Elastic Scaling: Automatic resource adjustment based on demand

User Experience Optimization

Progressive Loading

Providing better user experience:

- Preview Generation: Rapid generation of low-quality previews

- Incremental Optimization: Gradually improve model quality

- Background Processing: Perform time-consuming computations in background

Error Handling

Gracefully handling various exceptional situations:

- Input Validation: Check validity of user inputs

- Service Degradation: Provide simplified services during system overload

- Error Recovery: Automatic retry and error correction
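Automatic retry is typically implemented with exponential backoff and random jitter, so that many clients do not retry in lockstep against an overloaded generation service. The helper below is a generic sketch. Which exceptions count as transient (`TimeoutError` and `ConnectionError` here) is an assumption that depends on the service.

```python
import random
import time


def retry_with_backoff(fn, max_attempts=4, base_delay=0.5, max_delay=8.0,
                       retry_on=(TimeoutError, ConnectionError)):
    """Call fn(), retrying transient failures with capped exponential backoff and full jitter.

    Exceptions not listed in `retry_on` propagate immediately; after the last
    attempt the transient error is re-raised to the caller.
    """
    for attempt in range(max_attempts):
        try:
            return fn()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error
            delay = min(max_delay, base_delay * 2 ** attempt)
            time.sleep(random.uniform(0.0, delay))  # jitter de-synchronizes many clients
```

Non-transient errors such as invalid input should fail fast rather than be retried. That is why the helper only catches the exception types passed in `retry_on`.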

Open Source Ecosystem and Technology Democratization

Open Source Frameworks and Tools

Core Frameworks

- PyTorch3D: Facebook's 3D deep learning library

- Kaolin: NVIDIA's 3D deep learning toolkit

- Open3D: Open source 3D data processing library

Pre-trained Models and Datasets

- Hugging Face Hub: Hosts many pre-trained 3D generation models

- ModelNet: Standard 3D CAD model dataset, widely used for shape classification

- ShapeNet: Large-scale 3D shape database

Technology Democratization

Lowering Technical Barriers

Reducing usage barriers through tools and platforms:

- Visual Interfaces: Programming-free operation interfaces

- API Services: Simple interface calls

- Template Libraries: Pre-defined generation templates

Education and Training

Promoting technical knowledge dissemination:

- Online Courses: Systematic technical education

- Technical Documentation: Detailed usage guides

- Community Support: Active developer communities

Summary and Outlook

AI 3D generation technology is developing rapidly. From early simple geometric shape generation to current high-quality, diverse model generation, technological progress is remarkable. The emergence of advanced models like TRELLIS marks this field's entry into the practical application stage.

Technology Maturity:

- Generation quality is approaching commercial standards for many use cases

- Generation times of seconds to minutes make interactive use practical

- Multi-modal input supports rich application scenarios

Development Opportunities:

- Continuous advancement in computational hardware

- Continuous enrichment of training data

- Rapid expansion of application scenarios

Future Trends:

- Higher quality generation effects

- Stronger user control capabilities

- Wider application adoption

For developers and researchers, a solid understanding of these technical principles helps in using existing tools effectively and lays the foundation for future innovation.

As platforms like Open3D.art become more widespread, AI 3D generation is moving from the laboratory into mainstream applications. In a field evolving this quickly, keeping up with cutting-edge techniques will help developers seize future opportunities.